How to analyze data from a survey and unleash the full potential of your research

Better data leads to better decisions.

Gathering accurate data, however, is just the first step—even the highest-quality data won’t help if it’s not analyzed effectively. Whether you’re a researcher designing public health studies, a nonprofit measuring impact, or a business leader trying to understand your customers, knowing how to work with survey data is key to turning responses into meaningful insights.

In this guide, we’ll walk you through the following:

- Types of survey data to analyze

- How to analyze data

- Data analysis tools

- Best practices for data analysis

- Data interpretation

- Open-ended questions

- Mistakes to avoid

Along the way, you’ll find practical tips, tools, and techniques to help you interpret results with clarity and confidence, so you can analyze data like an expert.

Types of survey data to analyze

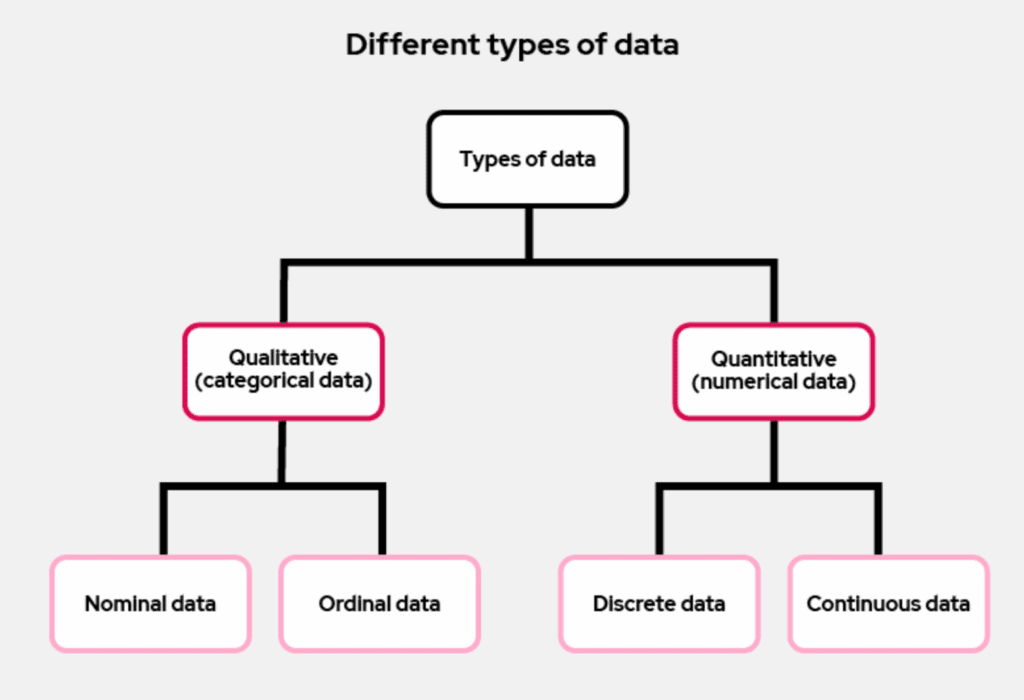

Broadly speaking, survey data falls into two categories: quantitative and qualitative. Each type of data plays a different role in your analysis and requires its own approach.

Quantitative data: The "what"

Quantitative data is numerical. It comes from closed-ended questions, such as multiple-choice or scale-based items.

This kind of data is structured, easy to tabulate, and ideal for spotting trends, conducting statistical analysis, and comparing subgroups. It can be nominal (categorical, such as gender or region) or ordinal (ordered, like satisfaction levels or likelihood to recommend). It’s also helpful to distinguish between discrete data (countable values, like the number of visits) and continuous data (measurable values, like time spent on a task).

Quantitative analysis answers questions like:

- What percentage of users are satisfied?

- How many people selected each response?

- Are there measurable differences between demographic groups?

Qualitative data: The "why"

Qualitative data is open-ended and text-based. It captures the nuance, context, and reasoning behind the numbers.

While qualitative responses are rich and insightful, they require more time and care to analyze. You might group them into themes or categories (a process known as coding), or use tools like sentiment analysis to uncover patterns.

Most surveys collect a mix of both data types.

In this guide, we’ll focus mainly on working with quantitative survey data, while also covering best practices for handling open-ended responses in a dedicated section later on.

How to analyze data from a survey

Step 1: Keep it clean

The first and arguably most important step in survey data analysis is data cleaning. Even the most sophisticated analysis techniques won’t deliver meaningful insights if your dataset is messy, incomplete, or inaccurate.

Why data cleaning matters

Raw survey data often contains incomplete responses, inconsistent formatting, or even duplicate entries. Some respondents may speed through your survey without reading the questions carefully, while others might only answer a portion before dropping off. These inconsistencies can skew your results or hide the real story your data is trying to tell.

Cleaning your data helps ensure that:

- You’re analyzing valid, high-quality responses

- Your results aren’t distorted by outliers or irrelevant data

- You can apply statistical tools effectively without errors

Build in quality from the start

Clean data starts even before the analysis stage. Tools like SurveyCTO support quality checks at the point of data collection, helping you flag suspicious patterns, monitor data quality in real time, and guide data collectors toward more reliable data. Building these checks into your survey form reduces the burden of cleaning later and helps ensure that your analysis starts from a strong foundation.

Even with robust checks, however, you’ll still want to clean your data thoroughly.

Data cleaning steps

Here are a few key steps to guide your data cleaning process:

- Remove low-quality or incomplete responses: Drop records where only the first question is answered or where responses were submitted unusually fast compared to the overall average (a sign of rushing).

- Check for duplicates: Look for repeated values in unique identifiers like email, respondent ID, or device ID using functions like COUNTIF in Excel, duplicated() in R, or DataFrame.duplicated() in pandas (Python). You can also compare submission timestamps or combinations of key fields (e.g., name and birthdate) to flag nearly identical responses. In software platforms like SurveyCTO, metadata such as device or enumerator ID can help identify repeat entries.

- Standardize formatting: Make equivalent response values (e.g., “Yes”, “yes”, “YES”) consistent across entries to avoid analysis errors.

- Screen for irrelevant respondents: For example, if your survey is targeting households earning below $50,000 annually, you’ll want to exclude responses from households that report incomes above that threshold to keep your analysis focused and valid.
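The cleaning steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a prescribed workflow: the field names (respondent_id, consent, income, duration_sec, q1 to q3), the sample rows, and the "under a third of the median duration" speed cutoff are all assumptions made for the example.

```python
from statistics import median

QUESTIONS = ["q1", "q2", "q3"]   # hypothetical question columns
INCOME_CEILING = 50_000          # eligibility threshold from the example above
MIN_SPEED_RATIO = 0.33           # assumed cutoff: under a third of the median duration

# Made-up raw responses for illustration only.
raw = [
    {"respondent_id": "r1", "consent": "Yes", "income": 32000, "duration_sec": 410, "q1": 4, "q2": 5, "q3": 4},
    {"respondent_id": "r2", "consent": "yes ", "income": 45000, "duration_sec": 380, "q1": 3, "q2": 4, "q3": None},
    {"respondent_id": "r2", "consent": "yes ", "income": 45000, "duration_sec": 380, "q1": 3, "q2": 4, "q3": None},
    {"respondent_id": "r3", "consent": "YES", "income": 72000, "duration_sec": 395, "q1": 5, "q2": 4, "q3": 5},
    {"respondent_id": "r4", "consent": "Yes", "income": 28000, "duration_sec": 35, "q1": 1, "q2": 1, "q3": 1},
    {"respondent_id": "r5", "consent": "Yes", "income": 39000, "duration_sec": 450, "q1": 2, "q2": None, "q3": None},
]

def answered(row):
    """Count the questions that have a non-missing answer."""
    return sum(row[q] is not None for q in QUESTIONS)

def clean(rows):
    # Standardize formatting: "Yes", "yes ", "YES" all become "yes".
    rows = [{**r, "consent": r["consent"].strip().lower()} for r in rows]

    # Check for duplicates: keep the first record per respondent ID.
    seen, unique = set(), []
    for r in rows:
        if r["respondent_id"] not in seen:
            seen.add(r["respondent_id"])
            unique.append(r)

    # Remove incomplete responses: only the first question (or nothing) answered.
    complete = [r for r in unique if answered(r) > 1]

    # Remove rushed responses: far faster than the typical duration.
    cutoff = MIN_SPEED_RATIO * median(r["duration_sec"] for r in complete)
    unrushed = [r for r in complete if r["duration_sec"] >= cutoff]

    # Screen for irrelevant respondents: keep households below the income ceiling.
    return [r for r in unrushed if r["income"] < INCOME_CEILING]

cleaned = clean(raw)
print([r["respondent_id"] for r in cleaned])  # ['r1', 'r2']
```

The median is used here instead of the mean because a few very long interviews would otherwise inflate the benchmark; either choice is a judgment call worth documenting.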

Step 2: Tool time

Depending on your goals, experience level, and the complexity of your dataset, there’s a wide range of tools and techniques to choose from, each with its strengths.

Choosing the right tool for the job

Your analysis tool doesn’t have to be fancy, but it should be powerful enough to help you explore your data thoroughly. Here are a few common options:

Excel or Google Sheets: Great for beginners or small datasets. Use built-in functions, filters, conditional formatting, and pivot tables to summarize and compare results.

Power BI or Tableau: Best for interactive dashboards and visual storytelling. These tools are ideal when you want to communicate insights across teams or track results over time.

Stata, SPSS, or R: Preferred for more advanced statistical analysis. These tools are commonly used in academic, research and development contexts, but are also helpful in a general business context.

Python: Offers full flexibility and is especially useful for automating repeatable analysis tasks or integrating data pipelines.

SurveyCTO users often connect their data to these platforms via integrations or automated exports, making it easy to analyze your data in whichever environment fits your workflow.

Pro tip

If you’re using SurveyCTO, check out our integrations guide for Power BI, explore our Stata commands for faster downloads and analysis, and learn about pysurveycto, a Python library that streamlines the process of extracting SurveyCTO data for Python users.

Key statistical techniques to know

No matter which tool you use, applying the right techniques is key to making sense of your data. Here are a few foundational methods, when to use them, and what they help you uncover:

Descriptive statistics: Use this to get a basic summary of your data. Measures like mean, median, and standard deviation help you understand central tendencies and variability. For instance, if you’re analyzing satisfaction scores, the average score tells you how respondents generally feel, while the standard deviation shows how varied their responses are.
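As a small illustration of descriptive statistics, the sketch below summarizes a made-up list of 1-to-5 satisfaction scores with Python's built-in statistics module. The scores are invented for the example.

```python
from statistics import mean, median, stdev

# Hypothetical satisfaction scores on a 1-5 scale.
scores = [4, 5, 3, 4, 2, 5, 4, 3, 5, 5]

summary = {
    "n": len(scores),
    "mean": mean(scores),                  # central tendency
    "median": median(scores),              # middle value, robust to extremes
    "std_dev": round(stdev(scores), 2),    # sample standard deviation (spread)
}
print(summary)
```

A mean of 4 with a standard deviation of about 1 suggests respondents are generally positive, with most answers within a point of the average.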

Cross-tabulation: Ideal for comparing how different subgroups responded. For example, you might break down satisfaction levels by gender or region. It’s often visualized as a table showing counts or percentages across two or more variables.
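A cross-tabulation can be built by counting pairs of values. The sketch below, using invented region and satisfaction data, produces row percentages so that regions of different sizes can be compared directly. In practice a spreadsheet pivot table or a statistics package would do the same job.

```python
from collections import Counter

LEVELS = ["satisfied", "neutral", "dissatisfied"]

# Hypothetical (region, satisfaction) pairs, one per respondent.
responses = [
    ("rural", "satisfied"), ("rural", "satisfied"), ("rural", "neutral"),
    ("rural", "dissatisfied"), ("rural", "dissatisfied"),
    ("urban", "satisfied"), ("urban", "satisfied"), ("urban", "satisfied"),
    ("urban", "satisfied"), ("urban", "neutral"),
]

counts = Counter(responses)
regions = sorted({region for region, _ in responses})

# Row percentages: the share of each region's respondents at each level.
table = {}
for region in regions:
    total = sum(counts[(region, level)] for level in LEVELS)
    table[region] = {
        level: round(100 * counts[(region, level)] / total, 1) for level in LEVELS
    }

for region, row in table.items():
    print(region, row)
```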

T-tests and ANOVA (Analysis of Variance): Use these when you want to know whether the differences you observe between groups (e.g., between age groups or user types) are statistically significant rather than just due to chance. A t-test compares the means of two groups (e.g., male vs. female respondents), while ANOVA compares three or more groups.
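To show what a two-group comparison computes, here is a hand-rolled sketch of Welch's t statistic (a t-test variant that does not assume equal variances) and its approximate degrees of freedom. The two score lists are invented. Turning the statistic into a p-value requires the t distribution, which a statistics package such as SciPy, R, or Stata provides.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)   # squared standard error of group a's mean
    vb = variance(b) / len(b)   # squared standard error of group b's mean
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical satisfaction scores for two user types.
group_a = [4, 5, 3, 4, 5, 4]
group_b = [3, 2, 4, 3, 2, 3]

t_stat, dof = welch_t(group_a, group_b)
print(round(t_stat, 2), round(dof, 1))
```

The larger the absolute t statistic relative to the degrees of freedom, the less plausible it is that the gap between the group means arose by chance.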

Regression analysis: Use this to examine relationships between variables and identify which ones have the most influence on outcomes. For example, you could explore how factors like age, income, or satisfaction with support predict overall program satisfaction.
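The idea behind regression can be illustrated with the simplest case: one predictor and a least-squares line. The sketch below uses invented data relating satisfaction with support to overall satisfaction. A real analysis with several predictors (age, income, and so on) would normally use a statistics package rather than hand-written formulas.

```python
from statistics import mean

# Hypothetical data: satisfaction with support (x) and overall satisfaction (y).
support = [1, 2, 3, 4, 5]
overall = [1.5, 2.0, 3.5, 4.0, 4.5]

mx, my = mean(support), mean(overall)
sxy = sum((x - mx) * (y - my) for x, y in zip(support, overall))
sxx = sum((x - mx) ** 2 for x in support)
syy = sum((y - my) ** 2 for y in overall)

slope = sxy / sxx                    # change in y per one-point change in x
intercept = my - slope * mx          # predicted y when x is 0
r_squared = sxy ** 2 / (sxx * syy)   # share of variance in y explained by x

print(round(slope, 2), round(intercept, 2), round(r_squared, 3))
```

Here each additional point of support satisfaction is associated with roughly 0.8 points of overall satisfaction. That is an association in toy data, not evidence of a causal effect.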

Conjoint analysis: Helpful when you want to understand trade-offs people make, such as which product features they value most. It’s often used in market research, product development, pricing, or policy research to prioritize attributes based on respondent choices.

Cluster or factor analysis: These are data reduction or grouping techniques. Cluster analysis helps segment your audience into groups with similar behaviors or responses (e.g., identifying different user types). Factor analysis uncovers underlying patterns by grouping correlated variables, such as collapsing multiple satisfaction items into a single “overall satisfaction” score.

These techniques go beyond basic summaries and help you uncover deeper patterns, differences, and drivers in your data.

Step 3: Five best practices for data analysis

Once your data is clean and loaded into the right tool, it’s time to dive into analysis. But before you start running regressions or creating visualizations, it’s essential to ground your work in a few key best practices to ensure your analysis is focused, accurate, and aligned with your research goals.

Define your goals first

Your analysis should always begin with a clear understanding of:

- The key questions you’re trying to answer

- The decisions this data informs

Whether you’re evaluating a program, testing a hypothesis, or tracking behavior over time, defining your analytical objectives upfront helps you prioritize the most relevant variables and avoid getting lost in the noise.

Summarize before you segment

Before diving into subgroup analysis or more complex statistical tests, start with basic summaries of your key variables. Look at averages, frequency counts, and response distributions.

These top-level summaries will give you a strong sense of overall patterns and can highlight early red flags, like skewed distributions or unusually high non-response rates, that may warrant further attention.

Don’t overlook metadata

If your survey platform captures metadata, such as start and end times, GPS coordinates, or enumerator IDs, consider how that information can enhance your analysis.

For example, analyzing survey duration might help you identify rushed or low-quality responses, while location data can add geographic context to your findings.
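As one way to put metadata to work, the sketch below derives interview durations from start and end timestamps and compares each enumerator's median duration with the overall median. The field names, timestamps, and the "half the overall median" cutoff are assumptions for illustration, not settings from any particular platform.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median

# Hypothetical submission metadata.
submissions = [
    {"enumerator": "e1", "start": "2024-03-01T09:00:00", "end": "2024-03-01T09:12:30"},
    {"enumerator": "e1", "start": "2024-03-01T10:00:00", "end": "2024-03-01T10:10:00"},
    {"enumerator": "e1", "start": "2024-03-01T11:00:00", "end": "2024-03-01T11:11:00"},
    {"enumerator": "e2", "start": "2024-03-01T09:00:00", "end": "2024-03-01T09:02:00"},
    {"enumerator": "e2", "start": "2024-03-01T09:30:00", "end": "2024-03-01T09:33:30"},
    {"enumerator": "e2", "start": "2024-03-01T10:00:00", "end": "2024-03-01T10:14:00"},
]

def duration_minutes(sub):
    """Interview length in minutes, from ISO 8601 start and end timestamps."""
    start = datetime.fromisoformat(sub["start"])
    end = datetime.fromisoformat(sub["end"])
    return (end - start).total_seconds() / 60

by_enumerator = defaultdict(list)
for sub in submissions:
    by_enumerator[sub["enumerator"]].append(duration_minutes(sub))

overall_median = median(d for durations in by_enumerator.values() for d in durations)
cutoff = 0.5 * overall_median   # assumed threshold for "suspiciously fast"

# Enumerators whose typical interview is much shorter than everyone else's.
flagged = [e for e, durations in by_enumerator.items() if median(durations) < cutoff]
print(flagged)  # ['e2']
```

A flag like this is a prompt for follow-up, not proof of a problem: short interviews can also reflect skip patterns or simpler households.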

Document your steps

It’s easy to forget which filters, exclusions, or transformations you applied once you’re deep into the analysis. By documenting your data cleaning and analysis decisions, you not only make your work replicable but also reduce the risk of misinterpreting or misreporting findings later on.

Keep quality front and center

Throughout your analysis, stay alert for outliers or patterns that could indicate data quality issues, such as extreme values (like respondents listing an age of 110!) or suspicious response patterns (like giving the same answer to every question in a block). Also, look for inconsistencies across related questions. Revisit your cleaning steps if necessary.
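Checks like these are easy to automate. The sketch below flags implausible ages and identical answers across a block of scale questions (sometimes called straight-lining). The records and the plausible age range of 15 to 100 are assumptions chosen for the example and should be adapted to your own survey.

```python
# Hypothetical records: an age plus answers to a block of five scale questions.
rows = [
    {"id": "a", "age": 34, "answers": [4, 3, 5, 4, 2]},
    {"id": "b", "age": 110, "answers": [3, 4, 3, 2, 4]},
    {"id": "c", "age": 27, "answers": [5, 5, 5, 5, 5]},
    {"id": "d", "age": 41, "answers": [2, 3, 2, 4, 3]},
]

def quality_flags(row, min_age=15, max_age=100):
    """Return a list of quality concerns for one record (empty if none)."""
    flags = []
    if not min_age <= row["age"] <= max_age:
        flags.append("implausible_age")
    if len(set(row["answers"])) == 1:   # every answer in the block is identical
        flags.append("straight_lining")
    return flags

flagged = {}
for row in rows:
    flags = quality_flags(row)
    if flags:
        flagged[row["id"]] = flags

print(flagged)  # {'b': ['implausible_age'], 'c': ['straight_lining']}
```

Flagged records deserve a closer look before any are dropped, since an identical run of answers can occasionally be genuine.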

Step 4: Making meaning—steps to interpret data

Once your survey data is cleaned, summarized, and analyzed with the right tools, it’s time to do the most important part: make sense of it. This is where data becomes more than just numbers—it becomes insight that can drive decisions, shape policies, and improve outcomes.

Here’s how.

Compare across subgroups

Breaking down your data by key demographics—such as gender, age, education level, income, or geographic location—can reveal important differences in perspective or experience.

For instance, you might find that overall satisfaction with a service is high but significantly lower among rural respondents, younger participants, or respondents from a particular industry.

Use crosstab analysis, filters, or disaggregated visualizations to explore these subgroup differences. Just be sure your sample sizes are large enough to support reliable conclusions.
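A simple guard against over-reading small subgroups is to report each group's size next to its result. The sketch below does this for invented satisfaction scores. The minimum group size of 3 is deliberately tiny to suit the toy data and is an assumption, not a recommended threshold.

```python
from collections import defaultdict
from statistics import mean

MIN_N = 3   # illustrative only; real analyses need a far larger minimum

# Hypothetical (location, satisfaction score) pairs.
records = [
    ("rural", 2), ("rural", 3), ("rural", 2), ("rural", 3),
    ("urban", 4), ("urban", 5), ("urban", 4),
    ("peri-urban", 5),
]

groups = defaultdict(list)
for location, score in records:
    groups[location].append(score)

# Report the mean alongside n, and mark groups too small to interpret.
report = {
    location: {
        "n": len(scores),
        "mean": round(mean(scores), 2),
        "reliable": len(scores) >= MIN_N,
    }
    for location, scores in groups.items()
}

for location, stats in report.items():
    print(location, stats)
```

The single peri-urban respondent has the highest score, but with n = 1 that number says nothing about peri-urban respondents in general.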

Find patterns, trends, and surprises

Start by reviewing the big picture. Are there clear trends or standout findings in the data? Do certain responses cluster together across questions or demographics? Identifying patterns, such as higher satisfaction in certain regions or consistent issues raised by a specific age group, can help point to areas of success or improvement.

At the same time, keep an eye out for unexpected results. Surprising findings may reveal new opportunities, point to overlooked challenges, or indicate where further investigation is needed.

Challenge your assumptions

It’s easy to interpret data through the lens of what you expect to find. Be deliberate about checking your assumptions and exploring alternate explanations for your results.

Are you mistaking correlation for causation? Could cultural or contextual factors be influencing responses? Stepping back and critically examining your initial interpretations can help you avoid bias and uncover deeper meaning in your findings.

Consider the time dimension

Think about whether your survey data represents a single moment in time (cross-sectional) or part of a longer trend (longitudinal). If you’ve run similar surveys in the past, compare current results to previous data to spot shifts or long-term patterns.

For example, have satisfaction scores steadily improved since a new initiative was launched? Has awareness of a policy changed over time?

If you don’t yet have historical data, consider setting a baseline now so future surveys can measure change more effectively.

Considerations for open-ended questions

While much of survey analysis focuses on numbers, open-ended questions offer something equally valuable: context, nuance, and the “why” behind the data. These free-text responses can reveal unexpected insights, highlight edge cases, or clarify confusing trends in the quantitative results.

But unlike structured responses, open-ended data requires a different approach to be useful in analysis.

Here’s how SurveyCTO partner and power user Laterite describes their approach:

"At Laterite, we have used large language models (LLMs) to analyze open-ended survey responses. First, we extract key data points such as unique IDs, open-ended responses and relevant indicators (e.g., gender, location) from our survey data, while removing any personally identifiable information (PII) manually – ensuring we don’t upload any PII to an LLM. Then, we share the open-ended responses to an LLM with detailed instructions to streamline the process of classifying responses into categories or sentiments. This allows for systematic analysis – while retaining human oversight – and makes it easy to integrate back into the original dataset for further analysis by the team."

John DiGiacomo, Director of Analytics at Laterite.

Why open-ended responses matter

Open-text feedback gives respondents the opportunity to speak in their own words, often revealing motivations, concerns, or praise that structured options might miss. A simple comment like “The process was confusing” or “I wish this service were offered in my language” can carry more meaning than a number on a scale.

These responses are particularly helpful when:

- Clarifying why someone gave a low (or high) rating

- Understanding outliers or unexpected patterns in the data

- Exploring emerging themes not anticipated during survey design

How to analyze open-ended data

To make sense of open-ended responses, start by organizing them using a process called thematic coding. This involves grouping similar responses under categories (or “codes”) that represent common themes or ideas.

5 steps to get started:

- Read through all responses to get a general sense of the themes.

- Create codes for recurring topics (e.g., “confusing interface,” “long wait times,” “helpful support”).

- Tag each response with one or more codes.

- Count and compare how often each theme appears and whether certain subgroups emphasize different issues.

- Visualize insights with tools like word clouds, bar charts, or grouped quotes to illustrate sentiment or patterns.
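The tagging and counting steps can be roughed out in code once a codebook exists. The sketch below uses simple keyword matching, which is a crude stand-in for human coding and will miss paraphrases and sarcasm. The codebook keywords and the comments are invented for the example.

```python
from collections import Counter

# Hypothetical codebook: each code maps to keywords that suggest it.
CODEBOOK = {
    "confusing interface": ["confusing", "unclear", "hard to find"],
    "long wait times": ["wait", "slow", "delay"],
    "helpful support": ["helpful", "friendly", "support"],
}

comments = [
    "The process was confusing and the menu was unclear.",
    "Long wait before anyone answered.",
    "Staff were friendly and helpful.",
    "Confusing forms, and the wait was too long.",
    "No complaints.",
]

def tag(comment):
    """Return every code whose keywords appear in the comment."""
    text = comment.lower()
    return [code for code, keywords in CODEBOOK.items()
            if any(keyword in text for keyword in keywords)]

tagged = [(comment, tag(comment)) for comment in comments]
theme_counts = Counter(code for _, codes in tagged for code in codes)
untagged = [comment for comment, codes in tagged if not codes]

print(theme_counts.most_common())
print(untagged)
```

Reviewing the untagged comments is a useful habit: they often point to themes the codebook has not captured yet.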

For more advanced or large-scale analysis, you can use text analysis or sentiment analysis tools to automatically categorize responses and detect emotional tone. Tools like ChatGPT and other AI-based platforms can also assist by quickly summarizing themes, suggesting codes, or classifying responses based on your criteria, saving significant time when dealing with hundreds or thousands of entries.

Don’t let rich data go to waste

Too often, open-ended responses end up in an appendix or are ignored entirely because they’re harder to analyze. But they’re often where the richest stories live. Even a handful of thoughtful quotes can make your findings more compelling—and more human.

To make these responses shine:

- Highlight illustrative quotes (anonymized, if needed) in your final report.

- Pair open-ended insights with related quantitative data for a fuller picture.

- Share these quotes with program teams or stakeholders—they can be powerful motivators for change.

Mistakes to avoid when analyzing survey data

Even with a clean dataset and the best tools at your disposal, it’s easy to make missteps that weaken the accuracy or impact of your findings. By understanding and avoiding these common mistakes, you’ll be better equipped to draw meaningful, reliable conclusions from your survey data.

Confusing correlation with causation

Just because two variables appear related doesn’t mean one causes the other. For example, if households with higher incomes report better service satisfaction, it doesn’t necessarily mean income drives satisfaction—other factors (like location, access, or expectations) could be at play.

Be cautious in your language to avoid implying a relationship where none exists. Use phrases like “is associated with” or “is correlated with” to describe relationships without suggesting causation, unless you have clear evidence to support a causal link.

Cherry-picking data to fit a narrative

It can be tempting to highlight only the stats that support your hypothesis or make your program look good, but doing so risks missing the full story and undermining trust in your work.

Instead, present both positive and negative findings, and explore why certain results may not align with expectations. Transparency adds credibility and can lead to more meaningful action.

Analyzing too early or without checking data quality

Jumping into analysis before your data is complete—or without reviewing its quality—can lead to misleading results. Incomplete datasets, missing responses, duplicate entries, or formatting issues can all distort your findings.

Set a clear cutoff point for data collection, ensure your sample is large enough to support meaningful insights, and always clean and validate your data before analyzing it.

Not adjusting your data to reflect the population

If your survey responses don’t match the makeup of the group you’re studying—for example, if you have too many responses from one age group or region—your results might be misleading. Weighting helps correct for these imbalances so your findings better reflect the overall population.

This is especially important for large or representative surveys, and many analysis tools (like Stata, R, or SPSS) can help you apply these adjustments.
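To make the idea of weighting concrete, here is a minimal post-stratification sketch: each respondent is weighted by their group's share of the population divided by its share of the sample. The age groups, population shares, and responses are invented, and real survey weighting often involves more steps (design weights, non-response adjustments, trimming).

```python
from collections import Counter

# Hypothetical population shares by age group (e.g., from a census).
population_share = {"18-34": 0.40, "35-54": 0.35, "55+": 0.25}

# Hypothetical sample: (age group, 1 if satisfied else 0).
# Older respondents are over-represented: half the sample, a quarter of the population.
sample = [
    ("18-34", 1), ("18-34", 0),
    ("35-54", 1), ("35-54", 1), ("35-54", 0),
    ("55+", 1), ("55+", 1), ("55+", 1), ("55+", 1), ("55+", 0),
]

n = len(sample)
group_sizes = Counter(group for group, _ in sample)
sample_share = {group: size / n for group, size in group_sizes.items()}

# Weight = population share / sample share, so under-represented groups count more.
weights = {group: population_share[group] / sample_share[group] for group in sample_share}

unweighted = sum(y for _, y in sample) / n
weighted = (sum(weights[group] * y for group, y in sample)
            / sum(weights[group] for group, _ in sample))

print(round(unweighted, 3), round(weighted, 3))  # 0.7 0.633
```

In this toy data, the unweighted satisfaction rate overstates the population figure because the most satisfied group is also the most over-represented.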

From data to decisions

Survey analysis isn’t just about crunching numbers—it’s about turning responses into actionable insights that can shape better programs, smarter policies, and stronger outcomes. Whether you’re evaluating a community health intervention or exploring public opinion on a new initiative, the value of your data depends on your ability to interpret it well.

At SurveyCTO, we believe in data that drives better decision making. That’s why we don’t stop at high-quality data collection: we support the full data lifecycle, from design to analysis, by integrating with tools like Stata, R, Power BI, and more.

Learn more about our integrations and how they can benefit your data-related work beyond the collection stage in the resources below!

Additional Resources: